Lighthouse Pilot Playbook: Prove Impact & Scale What Works

A lighthouse pilot is a short, tightly instrumented experiment that demonstrates value under real constraints. The goal is simple. Pick one or two high-enrollment courses, run a clean study, publish the results, then scale what works across the catalog.

To align stakeholders on the underlying concepts, point them to the explainers on adaptive learning, personalized learning paths, and AI in education, then anchor pilot decisions on those capabilities.

Pilot charter that leadership will sign

- Problem statement. Name the bottleneck. Example. High DFW (D, F, or withdrawal) rate in first-year Accounting and Biology.

- Scope. Two courses, one term, with specific sections and instructors.

- Primary outcome. Course pass rate or final exam mastery. Pick one main metric.

- Secondary outcomes. On-time submission rate, time on task, midterm score, withdrawal rate.

- Guardrails. Keep content and grading policies constant across groups. Publish a student-facing FAQ.

Deliver a one-page charter with timelines, roles, outcomes, and an approval box for the dean and QA lead.

Baseline metrics that make the effect size meaningful

Collect the last two to four terms for the pilot courses. Put baselines in a simple table.

| Metric | Definition | Baseline (mean or rate) | Source |
| --- | --- | --- | --- |
| Pass rate | Share of students with final grade ≥ passing threshold | 68% | SIS |
| Withdrawals | Share of students who withdraw before deadline | 10% | SIS |
| Midterm mastery | Average midterm percentage | 61% | LMS |
| On-time submission | Share of assignments submitted on time | 74% | LMS |

Formulas you will actually use

- Pass rate difference. Δ = Pass_treat − Pass_control

- Continuous outcome difference. Δ = mean_treat − mean_control

- Standardized effect (Cohen’s d). d = Δ ÷ pooled_sd
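The three formulas above translate directly into a few lines of Python. This is a minimal sketch using only the standard library. The function names and the sample scores are made up for illustration, and the pooled standard deviation uses the usual sample-variance weighting, which is an assumption since the playbook does not specify one.

```python
from math import sqrt
from statistics import mean, variance


def rate_difference(pass_treat: float, pass_control: float) -> float:
    """Pass-rate difference as a proportion (0.06 means 6 percentage points)."""
    return pass_treat - pass_control


def pooled_sd(treat: list[float], control: list[float]) -> float:
    """Pooled standard deviation, weighting each arm's sample variance by n - 1."""
    n_t, n_c = len(treat), len(control)
    weighted = (n_t - 1) * variance(treat) + (n_c - 1) * variance(control)
    return sqrt(weighted / (n_t + n_c - 2))


def cohens_d(treat: list[float], control: list[float]) -> float:
    """Standardized effect: mean difference divided by the pooled SD."""
    return (mean(treat) - mean(control)) / pooled_sd(treat, control)


# Hypothetical midterm percentages, for illustration only.
treat_scores = [70.0, 65.0, 80.0, 75.0]
control_scores = [60.0, 55.0, 70.0, 65.0]

delta_pass = rate_difference(0.74, 0.68)
delta_mean = mean(treat_scores) - mean(control_scores)
d = cohens_d(treat_scores, control_scores)
print(f"pass-rate delta={delta_pass:.2f}, mean delta={delta_mean:.1f}, d={d:.2f}")
```

Keeping the rate difference as a proportion and converting to percentage points only at reporting time avoids mixing units in the analysis.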

State the smallest effect you will count as success before the term starts. Example. We will consider the pilot successful if the pass rate improves by 6 percentage points and withdrawals drop by 2 percentage points, with no harm to midterm mastery.

Control group design that stands up in review

You have three options. Choose the cleanest your context allows.

- Randomized by section. Randomly assign sections to treatment or control. Cleanest and easiest to explain.

- Matched controls. Pair each treated section with a similar section from the same term taught by a comparable instructor. Match on prior GPA, program, gender split, and enrollment size.

- Stepped-wedge. All sections eventually receive the intervention but at different start weeks. Good when you want everyone to benefit while still estimating impact.
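If you choose randomization by section, the draw itself can be a short, auditable script. The sketch below assumes an even split between arms and uses hypothetical section IDs. The seed value is arbitrary, so record whichever one you use in the charter to let reviewers reproduce the assignment.

```python
import random


def assign_sections(section_ids: list[str], seed: int) -> dict[str, str]:
    """Randomly split sections into treatment and control arms."""
    rng = random.Random(seed)          # fixed seed makes the draw reproducible
    shuffled = sorted(section_ids)     # sort first so input order cannot matter
    rng.shuffle(shuffled)
    half = len(shuffled) // 2          # with an odd count, control gets the extra section
    return {
        section: ("treatment" if index < half else "control")
        for index, section in enumerate(shuffled)
    }


# Hypothetical section IDs, for illustration only.
sections = ["ACC101-01", "ACC101-02", "ACC101-03", "ACC101-04"]
assignment = assign_sections(sections, seed=2024)
for section, arm in sorted(assignment.items()):
    print(section, arm)
```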

Quick power check

For a pass-rate lift from 68% to 74% at α = 0.05 (two-sided) and power = 0.80, a two-proportion test needs roughly 900 students per arm under the usual normal approximation, and more if whole sections are randomized, because students in the same section are not independent. Use your registrar’s actual section sizes to refine the estimate. For continuous outcomes, a back-of-envelope guide is:

n per arm ≈ 2 * σ² * (Zα/2 + Zβ)² / Δ²

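Where σ is the baseline standard deviation, Δ is the smallest meaningful change, and Zα/2 and Zβ are the standard normal critical values for the two-sided significance level and the power (about 1.96 and 0.84 for α = 0.05 and power = 0.80).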
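Both sample-size calculations can be checked with the standard library. The sketch below uses the unpooled normal approximation for two proportions and the continuous-outcome formula above. The function names are illustrative, and it treats students as independent, so it ignores clustering within sections. Running it on the 68% to 74% example is a useful check on any rule-of-thumb figure.

```python
from math import ceil
from statistics import NormalDist


def z_total(alpha: float, power: float) -> float:
    """Sum of the two-sided critical value and the power quantile."""
    normal = NormalDist()
    return normal.inv_cdf(1 - alpha / 2) + normal.inv_cdf(power)


def n_per_arm_proportions(p_control: float, p_treat: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Students per arm for a two-proportion test (normal approximation)."""
    variance_sum = p_control * (1 - p_control) + p_treat * (1 - p_treat)
    delta = p_treat - p_control
    return ceil(z_total(alpha, power) ** 2 * variance_sum / delta ** 2)


def n_per_arm_means(sigma: float, delta: float,
                    alpha: float = 0.05, power: float = 0.80) -> int:
    """Students per arm for a continuous outcome: 2 * sigma^2 * z^2 / delta^2."""
    return ceil(2 * sigma ** 2 * z_total(alpha, power) ** 2 / delta ** 2)


n_pass = n_per_arm_proportions(0.68, 0.74)
# Hypothetical midterm SD of 15 points and a 5-point target change.
n_midterm = n_per_arm_means(sigma=15.0, delta=5.0)
print(f"pass rate: {n_pass} per arm, midterm: {n_midterm} per arm")
```

If sections are the unit of randomization, inflate these figures by a design effect estimated from your own historical data.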

Data collection that does not collapse during midterms

Instrument once, then reuse every term. Keep it minimal and consistent.

Core tables

- enrollments(student_id, section_id, term, program, prior_gpa, baseline_risk)

- assignments(assignment_id, course_id, due_ts, points, type)

- submissions(student_id, assignment_id, submitted_ts, on_time_flag, score_pct, rubric_levels[])

- events(student_id, course_id, event_ts, event_type, time_on_task_sec)

- outcomes(student_id, course_id, midterm_pct, final_pct, pass_flag, withdrawal_flag)

- treatment(student_id, section_id, treatment_flag, start_ts)
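As one possible starting point, the core tables can be prototyped in an in-memory SQLite database before you commit to a warehouse design. The sketch below covers three of the six tables, and the others follow the same pattern. Column types, key choices, the JSON text encoding for rubric_levels, and the sample rows are all assumptions, not part of the playbook.

```python
import sqlite3

SCHEMA = """
CREATE TABLE enrollments (
    student_id    TEXT NOT NULL,
    section_id    TEXT NOT NULL,
    term          TEXT NOT NULL,
    program       TEXT,
    prior_gpa     REAL,
    baseline_risk REAL,
    PRIMARY KEY (student_id, section_id, term)
);
CREATE TABLE assignments (
    assignment_id TEXT PRIMARY KEY,
    course_id     TEXT NOT NULL,
    due_ts        TEXT NOT NULL,   -- ISO 8601 so string comparison orders correctly
    points        REAL,
    type          TEXT
);
CREATE TABLE submissions (
    student_id    TEXT NOT NULL,
    assignment_id TEXT NOT NULL REFERENCES assignments(assignment_id),
    submitted_ts  TEXT,
    on_time_flag  INTEGER,         -- 1 = on time, 0 = late
    score_pct     REAL,
    rubric_levels TEXT,            -- array stored as JSON text (assumption)
    PRIMARY KEY (student_id, assignment_id)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Hypothetical rows, for illustration only.
conn.execute(
    "INSERT INTO assignments VALUES ('A1', 'ACC101', '2025-02-01T23:59:00', 10, 'quiz')"
)
conn.execute(
    "INSERT INTO submissions VALUES ('S1', 'A1', '2025-02-01T20:00:00', NULL, 90.0, '[3, 4]')"
)

# Derive on_time_flag from the timestamps instead of trusting a hand-entered value.
conn.execute("""
    UPDATE submissions
    SET on_time_flag = (
        SELECT submissions.submitted_ts <= a.due_ts
        FROM assignments AS a
        WHERE a.assignment_id = submissions.assignment_id
    )
""")
print(conn.execute("SELECT student_id, on_time_flag FROM submissions").fetchall())
```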

Metadata you must lock

- Mapping of outcomes to assessments

- Rubric versions and any grading policy changes

- Instructor roster and TA support hours

Document all of this in a short data dictionary. Use consistent IDs across tables. Add missing-data flags and keep a log of late grade posts so that unposted grades do not show up as spurious dips in midterm dashboards.
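Two of these habits, consistent IDs and missing-data flags, are easy to automate. The sketch below works on plain lists of dictionaries. The helper names and sample rows are hypothetical, and a production pipeline would more likely run equivalent checks in SQL or a dataframe library.

```python
def orphan_ids(child_rows: list[dict], parent_rows: list[dict], key: str) -> list[str]:
    """Return key values that appear in child_rows but not in parent_rows."""
    parent_keys = {row[key] for row in parent_rows}
    child_keys = {row[key] for row in child_rows}
    return sorted(child_keys - parent_keys)


def add_missing_flags(rows: list[dict], fields: list[str]) -> list[dict]:
    """Copy each row and add a <field>_missing boolean for every listed field."""
    return [
        {**row, **{f"{field}_missing": row.get(field) is None for field in fields}}
        for row in rows
    ]


# Hypothetical rows, for illustration only.
enrollments = [{"student_id": "S1"}, {"student_id": "S2"}]
outcomes = [
    {"student_id": "S1", "midterm_pct": 72.0, "final_pct": None},
    {"student_id": "S3", "midterm_pct": None, "final_pct": None},
]

print(orphan_ids(outcomes, enrollments, "student_id"))
flagged = add_missing_flags(outcomes, ["midterm_pct", "final_pct"])
print(flagged[0])
```

An explicit flag lets a dashboard separate "grade not yet posted" from a genuine zero.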

Implementation that protects fidelity

Adaptive learning only works if the content actually aligns to outcomes and instructors use it as intended.

- Content alignment. For each outcome, identify the items or activities that target it. Check coverage breadth and depth.

- Release plan. Schedule modules and practice sets before the term begins.

- Faculty enablement. Run a 2-hour hands-on micro-workshop on creating and reviewing adaptive paths. Provide a one-pager with office-hours scripts.

- Fidelity checks. A weekly report shows module release adherence, the proportion of students assigned to adaptive paths, and instructor review rates of system recommendations.
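The three fidelity measures reduce to simple ratios per section per week. The sketch below shows one way to compute them. The field names, the SectionWeek record, and the sample counts are assumptions, since the playbook does not define how the underlying counts are exported.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SectionWeek:
    """One section's raw counts for one week (hypothetical export format)."""
    section_id: str
    modules_scheduled: int
    modules_released_on_time: int
    students_enrolled: int
    students_on_adaptive_path: int
    recommendations_issued: int
    recommendations_reviewed: int


def share(part: int, whole: int) -> Optional[float]:
    """Ratio of part to whole, or None when there is nothing to measure."""
    return part / whole if whole else None


def fidelity_report(week: SectionWeek) -> dict:
    """Compute the three weekly fidelity ratios for one section."""
    return {
        "section_id": week.section_id,
        "release_adherence": share(week.modules_released_on_time, week.modules_scheduled),
        "adaptive_assignment_rate": share(week.students_on_adaptive_path, week.students_enrolled),
        "instructor_review_rate": share(week.recommendations_reviewed, week.recommendations_issued),
    }


# Hypothetical counts, for illustration only.
week = SectionWeek("BIO110-02", 2, 2, 40, 34, 25, 10)
report = fidelity_report(week)
print(report)
```

Returning None instead of zero for an empty denominator keeps a week with no scheduled modules from looking like a fidelity failure.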

Impact evaluation that is defensible

Pick one primary analysis and one robustness check. Keep it simple and auditable.

- Difference in means or proportions. Compare treatment versus control at the end of term.

- Difference-in-differences. If you have pre-pilot terms, compute (treat_post − treat_pre) − (ctrl_post − ctrl_pre).

- Regression adjustment. Control for prior GPA, program, and baseline risk to improve precision.

- Heterogeneity. Report results for first-year students, working adults, and repeaters. Do not over-slice. Three to five policy-relevant subgroups is enough.

- Missing data plan. Predefine rules for late grades and withdrawals. Use intention-to-treat as the main estimate. Per-protocol can be a sensitivity check.
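The first two analyses fit in a few lines, which helps keep them auditable. The sketch below computes a difference in proportions with a Wald confidence interval and the difference-in-differences estimate from the formula above. The counts and rates are made up, and the interval treats students as independent, so it will be too narrow when whole sections were randomized.

```python
from math import sqrt
from statistics import NormalDist


def diff_in_proportions(pass_t: int, n_t: int, pass_c: int, n_c: int,
                        conf: float = 0.95) -> tuple[float, float, float]:
    """Treatment minus control pass rate with a Wald confidence interval."""
    p_t, p_c = pass_t / n_t, pass_c / n_c
    diff = p_t - p_c
    se = sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    z = NormalDist().inv_cdf(0.5 + conf / 2)
    return diff, diff - z * se, diff + z * se


def diff_in_diff(treat_pre: float, treat_post: float,
                 ctrl_pre: float, ctrl_post: float) -> float:
    """(treat_post - treat_pre) - (ctrl_post - ctrl_pre)."""
    return (treat_post - treat_pre) - (ctrl_post - ctrl_pre)


# Hypothetical counts: 296 of 400 pass in treatment, 272 of 400 in control.
estimate, low, high = diff_in_proportions(296, 400, 272, 400)
print(f"difference={estimate:.3f}, 95% CI=({low:.3f}, {high:.3f})")

# Hypothetical pass rates before and during the pilot.
did = diff_in_diff(treat_pre=0.68, treat_post=0.74, ctrl_pre=0.69, ctrl_post=0.70)
print(f"difference-in-differences={did:.3f}")
```

With intention-to-treat as the main estimate, pass_t and n_t should count every student assigned to a treatment section, including those who never opened an adaptive activity.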

What to publish on one page

- Primary outcome estimate with confidence interval

- A small table for secondary outcomes

- A forest plot for key subgroups

- A short paragraph on assumptions and any deviations from plan

Scalability plan from pilot to program

Write this as a table the provost can sign.

| Area | What to scale | Threshold to unlock scaling | Owner |
| --- | --- | --- | --- |
| Academic | Course templates and aligned item banks | Pass rate +6 pp without harm to midterm mastery | Program chair |
| Faculty | Bootcamp and playbooks for new instructors | 80% of new adopters complete enablement | CTL |
| Tech | Content sync, SSO, LTI connections, data pipelines | Error rate <1% across nightly jobs for 4 weeks | IT |
| Ops | Case management and tutoring escalation | Response SLA <48 hours maintained during finals | Advising lead |
| Finance | License and support model | Cost per successful credit earned improves term over term | Finance |

State the limits too. For example, full campus rollout is paused if weekly job failures exceed the agreed threshold or if pass-rate gains vanish in two consecutive terms.
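Writing the pause rule as code forces you to define its terms. The sketch below is one possible reading: it reuses the 1% error-rate figure from the Tech row as the failure threshold and treats a gain of zero or less as "vanished". Both choices, along with the function name and inputs, are assumptions to replace with your own definitions.

```python
def should_pause_rollout(weekly_job_failure_rates: list[float],
                         pass_rate_gains_by_term: list[float],
                         failure_threshold: float = 0.01) -> bool:
    """Return True if either pause condition is met."""
    # Condition 1: any week's job failure rate exceeds the threshold.
    jobs_failing = any(rate > failure_threshold for rate in weekly_job_failure_rates)
    # Condition 2: the two most recent terms both show no pass-rate gain.
    last_two = pass_rate_gains_by_term[-2:]
    gains_vanished = len(last_two) == 2 and all(gain <= 0 for gain in last_two)
    return jobs_failing or gains_vanished


# Hypothetical inputs, for illustration only.
print(should_pause_rollout([0.002, 0.015], [0.06, 0.05]))   # one bad week of jobs
print(should_pause_rollout([0.002], [0.06, 0.0, -0.01]))    # gains gone for two terms
print(should_pause_rollout([0.002], [0.0, 0.06]))           # gains recovered
```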

Risk register and mitigations you can act on

- Instructor drift. New sections skip adaptive activities. Mitigation. Weekly fidelity report and targeted coaching.

- Student overload. Too many practice items. Mitigation. Cap adaptive sets per week and publish expected time on task.

- Data quality. Late grades or missing rubric scores. Mitigation. Grade-posting deadline and automated pings.

- Equity gap. Benefits do not reach working adults. Mitigation. Tailored nudges and time-flexible practice windows.

- Tool fatigue. Multiple systems compete for attention. Mitigation. Use LTI launch points inside the LMS and a single student checklist.

Timeline you can copy

- Weeks 1 to 2. Baseline extraction, charter sign-off, randomization or matching, content alignment audit.

- Week 3. Faculty workshop, pilot section configuration, student FAQ published.

- Weeks 4 to 11. Run of term. Fidelity checks weekly. Midterm mini-review.

- Week 12. Final grading. Data freeze and analysis.

- Week 13. Publish one-page results and a short slide for the senate.

- Week 14. Decision to scale, pause, or rerun with adjustments.

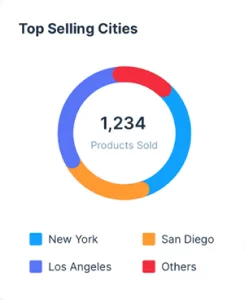

What success looks like on one dashboard

- Primary outcome with confidence interval and target line

- Withdrawals, on-time submissions, time on task trend

- Fidelity panel. Module releases on schedule, percent of students assigned to adaptive paths, instructor review rate

- Equity panel. Effect by subgroup with guardrails

- Ops panel. Pipeline success rate, LTI launches, support tickets

Where PathBuilder fits

- Use adaptive learning to create outcome-aligned practice that adapts to each learner’s mastery profile.

- Guide students with personalized learning paths so they know exactly what to do after each diagnostic.

- Provide faculty with quick authoring and analytics, framed by AI in education resources for formative use.

When you are ready to scope a lighthouse pilot and set up the data feeds, book a structured walkthrough on the About PathBuilder page.
