How to Build an Early Warning Nudge System (Higher Ed)

A high performing student early alert system in higher ed is more than a few LMS flags. It is a coordinated service with data pipelines, a rules and scoring layer, human advising workflows, and message playbooks that drive measurable behavior change. Use this blueprint to design, procure, or improve your program.

System architecture at a glance

- Ingest layer. Pull inputs from the LMS, SIS, attendance, tutoring, advising CRM, and financial holds.

- Feature store. Turn raw logs into weekly features such as inactivity streaks, missing work counts, mastery gaps, and grade volatility.

- Decision layer. Two parts: 1) deterministic triggers and 2) a risk score. Use thresholds and a scoring matrix to prioritize outreach.

- Orchestration. A service that opens cases, assigns owners, schedules nudges, and enforces quiet hours and cool downs.

- Advisor console. Queues, student context, recommended scripts, and next best actions.

- Evidence trail. Every alert, message, and call outcome is logged for evaluation and compliance.

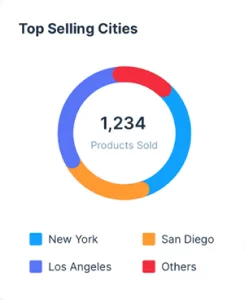

- Warehouse and dashboards. Cohort KPIs, funnel drop offs, and advisor workload.

If stakeholders need a nontechnical primer to level set, link to your explainers on adaptive learning, personalized learning paths, and AI in education, then anchor this project in those capabilities.

Signal engineering that actually predicts risk

Design your signal library with a clear definition, rationale, and lead time so you do not flood advisors.

| Signal group | Concrete feature | Rationale | Lead time | Source |
| --- | --- | --- | --- | --- |
| Engagement analytics | 5 day inactivity streak, 14 day slope of logins, drop in session length | Activity dips often precede missed work | 3 to 10 days | LMS logs |
| Coursework behavior | Count of overdue items, late submit ratio, quiz attempts per item | Captures friction and avoidance | 1 to 7 days | LMS gradebook |
| Performance | Rolling quiz mean under 65 percent, grade volatility index | Predicts failure or withdrawal | 1 to 4 weeks | LMS grades |
| Attendance | Two consecutive absences in a 3 credit course | Strong stop out predictor | 1 to 2 weeks | Attendance |
| Help seeking | No office hours or tutoring bookings within 7 days of a flag | Signals low activation energy | 1 to 2 weeks | Advising, tutoring |
| Admin blockers | Bursar, registrar, or financial aid holds | Nonacademic barriers | Same week | SIS, finance |

Publish a simple data dictionary and a student facing explainer for transparency. For a reference framework, review the Jisc code of practice for learning analytics.
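As a rough illustration, a few of the features in the signal table could be computed like this. It is a minimal sketch: the function names, the input shapes, and the choice to define an inactivity streak as "days since the last recorded activity" are assumptions for the example, not a prescribed schema.

```python
from datetime import date

def inactivity_streak(activity_dates, as_of):
    """Days between the latest activity on or before `as_of` and `as_of`.

    Returns None when the student has no recorded activity, so the caller
    can decide how to treat students who never logged in.
    """
    past = [d for d in activity_dates if d <= as_of]
    if not past:
        return None
    return (as_of - max(past)).days

def rolling_quiz_mean(scores, window=3):
    """Mean of the most recent `window` quiz scores (in percent)."""
    recent = scores[-window:]
    return sum(recent) / len(recent) if recent else None

def late_submit_ratio(submissions):
    """Share of late submissions; each item is a (submitted, due) date pair."""
    if not submissions:
        return 0.0
    late = sum(1 for submitted, due in submissions if submitted > due)
    return late / len(submissions)
```

Writing each feature as a small, named function makes it easier to keep the data dictionary and the code in step: one entry per function, with the same definition in both places.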

Trigger design patterns that avoid noise

Start with deterministic rules you can explain. Pair each rule with a suppression and a cool down.

Inactivity plus missing work

If inactivity_streak ≥ 5 days and overdue_count ≥ 1

Then open case Priority 2, assign to advisor, schedule SMS within 6 hours

Suppress for 3 days after any student reply

Early low performance

If week ≤ 4 and rolling_quiz_mean < 65 percent

Then open case Priority 1, schedule call within 24 hours, attach study plan template

Cool down 7 days unless new graded work arrives

Attendance slide

If consecutive_absences ≥ 2

Then send check in email with two time slots and CC instructor

Escalate to call if no response in 48 hours
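The first rule above, inactivity plus missing work, might be expressed in code roughly as follows. This is a sketch under assumptions: the `StudentWeek` record, its field names, and the returned dictionary are hypothetical, and a real orchestration service would persist cases rather than return them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class StudentWeek:
    inactivity_streak: int                     # days without LMS activity
    overdue_count: int                         # overdue items right now
    last_reply_at: Optional[datetime] = None   # most recent student reply

SUPPRESS_AFTER_REPLY = timedelta(days=3)

def inactivity_plus_missing_work(s: StudentWeek, now: datetime) -> Optional[dict]:
    """Return a case spec when the rule fires, or None when it does not."""
    # Suppression: stay quiet for 3 days after any student reply.
    if s.last_reply_at is not None and now - s.last_reply_at < SUPPRESS_AFTER_REPLY:
        return None
    # Trigger: at least 5 inactive days and at least 1 overdue item.
    if s.inactivity_streak >= 5 and s.overdue_count >= 1:
        return {
            "priority": 2,
            "action": "sms",
            "due_by": now + timedelta(hours=6),  # SMS within 6 hours
        }
    return None
```

Checking suppression before the trigger condition keeps the "why did this student not get an alert" question easy to answer from the code alone.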

Back test on two or three past terms. Use confusion matrix style diagnostics to balance sensitivity and precision. Revisit thresholds each term, since course pacing shifts the timing of useful alerts.
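A back test of one threshold can be as simple as the sketch below, which counts confusion matrix cells and sweeps candidate thresholds. The inputs are assumed to be parallel lists from a past term: one feature value per student and whether that student went on to fail or withdraw. The function names are illustrative.

```python
def confusion_counts(flagged, failed):
    """Count (tp, fp, fn, tn) for parallel lists of booleans."""
    tp = sum(1 for f, y in zip(flagged, failed) if f and y)
    fp = sum(1 for f, y in zip(flagged, failed) if f and not y)
    fn = sum(1 for f, y in zip(flagged, failed) if not f and y)
    tn = sum(1 for f, y in zip(flagged, failed) if not f and not y)
    return tp, fp, fn, tn

def precision_recall(flagged, failed):
    """Precision: share of flags that were right. Recall: share of failures caught."""
    tp, fp, fn, _ = confusion_counts(flagged, failed)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def sweep_thresholds(values, failed, thresholds):
    """Precision and recall of the rule `value >= t` for each candidate t."""
    return {t: precision_recall([v >= t for v in values], failed) for t in thresholds}
```

Lower thresholds usually raise recall (sensitivity) at the cost of precision, which in this setting translates directly into more advisor time spent on students who did not need outreach.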

Add a risk score, but make it legible

A score helps you triage limited capacity and must be interpretable.

- Baseline. Start with logistic regression on weekly features. Calibrate so a 0.35 risk is roughly a 35 percent failure probability.

- Explainability. Show per student feature contributions in the console. For example, inactivity streak and two missed quizzes drove most of the risk this week.

- Fairness checks. Run subgroup AUC and calibration by program, entry track, or campus. Compare false positive and false negative rates. Adjust features or thresholds and consider group aware calibration where needed.

- Lead time tuning. Include only signals available with enough time to act.
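To make the explainability point concrete, here is a minimal sketch of per student feature contributions for a logistic model. The intercept and weights are made-up placeholders, not fitted values; in practice they would come from your trained and calibrated model.

```python
import math

# Hypothetical coefficients for illustration only.
INTERCEPT = -2.0
WEIGHTS = {
    "inactivity_streak": 0.25,
    "missed_quizzes": 0.6,
    "late_submit_ratio": 1.0,
}

def risk_and_contributions(features):
    """Return (risk, contributions sorted from largest to smallest).

    In a logistic model each feature adds weight * value to the log odds,
    so those products can be shown to advisors as risk drivers.
    """
    contributions = {
        name: weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()
    }
    logit = INTERCEPT + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return risk, ranked
```

For a student with a 4 day inactivity streak and two missed quizzes, the top two entries of `ranked` would be the missed quizzes and the inactivity streak, which is the kind of summary the console can display next to the score.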

Keep it simple. Your deterministic rules will still do much of the work and are easier to govern.

Prioritization that respects human capacity

Advisors need a queue they can clear. Create a priority score:

priority = 0.6 * risk_score + 0.3 * recoverability + 0.1 * equity_boost

- Recoverability is higher for students early in term with a small mastery gap.

- Equity boost nudges priority for populations your institution intends to support more proactively.

- Set daily caps per advisor. Auto reassign when capacity is exceeded.
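The priority formula and daily caps could be combined along these lines. This is a sketch: the case dictionary keys, the lightest-load assignment rule, and the overflow list are assumptions chosen for the example, and all three inputs are assumed to be scaled to the 0 to 1 range.

```python
def priority(risk_score, recoverability, equity_boost):
    """Weighted priority from the formula above; inputs assumed in [0, 1]."""
    return 0.6 * risk_score + 0.3 * recoverability + 0.1 * equity_boost

def assign(cases, advisors, daily_cap):
    """Give the highest priority cases to advisors without exceeding the cap.

    Returns (load, overflow): a dict of advisor -> student ids, and the ids
    that did not fit today and need reassignment or an automated first touch.
    """
    ranked = sorted(
        cases,
        key=lambda c: priority(c["risk"], c["recoverability"], c["equity"]),
        reverse=True,
    )
    load = {a: [] for a in advisors}
    overflow = []
    for case in ranked:
        open_advisors = [a for a in advisors if len(load[a]) < daily_cap]
        if not open_advisors:
            overflow.append(case["student"])
            continue
        # Pick the advisor with the lightest load so queues stay balanced.
        target = min(open_advisors, key=lambda a: len(load[a]))
        load[target].append(case["student"])
    return load, overflow
```

Anything that lands in `overflow` is a signal that thresholds or staffing need attention, not a queue to carry forward silently.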

Model weekly alert volume before launch. Example: if you expect 300 alerts, average handling time is 12 minutes, and you have 8 advisors with 8 hours each, your ceiling is 320 alerts (8 × 8 × 60 ÷ 12), and only if every advisor minute goes to alerts. That leaves almost no slack for 300 alerts, so plan to raise thresholds, expand staff, or automate first touch nudges.
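The capacity check is plain arithmetic, so it is easy to keep in a small helper and rerun whenever staffing or handling time changes. The `utilization` parameter is an assumption added for the sketch: it is the share of advisor time actually available for alerts.

```python
def weekly_alert_ceiling(advisors, hours_each, minutes_per_alert, utilization=1.0):
    """Maximum alerts the team can handle in a week.

    `utilization` is the fraction of advisor time available for alerts
    (1.0 means every minute, which is rarely realistic).
    """
    available_minutes = advisors * hours_each * 60 * utilization
    return int(available_minutes // minutes_per_alert)

def capacity_gap(expected_alerts, ceiling):
    """Alerts that exceed capacity; 0 when the team can absorb the volume."""
    return max(0, expected_alerts - ceiling)

# Inputs from the example: 8 advisors, 8 hours each, 12 minutes per alert.
ceiling = weekly_alert_ceiling(advisors=8, hours_each=8, minutes_per_alert=12)
gap = capacity_gap(expected_alerts=300, ceiling=ceiling)
```

Rerunning the helper with a lower `utilization` shows how quickly the ceiling drops once meetings, notes, and follow ups are taken into account.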

Playbooks that change behavior, not just send messages

Each trigger maps to a short, testable playbook with channel logic, script variants, and a clear next step. Keep copy concise and supportive.

Inactivity streak, SMS first

- SMS hour 0: “We missed you this week. Want a mini plan to restart? Reply 1 for deadlines, 2 for study plan, 3 to book a 10 minute call.”

- Email hour 24 if no reply: two short time slots and a one page checklist

- Call hour 72 if still no reply

- Close loop with instructor and record outcome
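The channel logic for this playbook can be written as data plus a small scheduler. The sketch below assumes quiet hours of 21:00 to 08:00 and uses naive local datetimes; both are illustrative choices, and a production service would need time zone handling and per student preferences.

```python
from datetime import datetime, time, timedelta

# (hours after the case opens, channel), mirroring the steps above.
INACTIVITY_PLAYBOOK = [(0, "sms"), (24, "email"), (72, "call")]

QUIET_START = time(21, 0)  # assumed quiet hours start
QUIET_END = time(8, 0)     # assumed quiet hours end

def defer_past_quiet_hours(when):
    """Move a send time that falls in quiet hours to the next 08:00."""
    if when.time() >= QUIET_START:
        return datetime.combine(when.date() + timedelta(days=1), QUIET_END)
    if when.time() < QUIET_END:
        return datetime.combine(when.date(), QUIET_END)
    return when

def schedule(opened_at, replied_at=None):
    """Return [(send_time, channel)]; a student reply cancels later steps."""
    plan = []
    for offset_hours, channel in INACTIVITY_PLAYBOOK:
        due = opened_at + timedelta(hours=offset_hours)
        if replied_at is not None and replied_at <= due:
            break  # the student responded before this step was due
        plan.append((defer_past_quiet_hours(due), channel))
    return plan
```

Keeping the steps in a list makes script variants and timing changes a data edit instead of a code change, which helps when you A/B test playbooks.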

Low quiz average, email first

- Email with two concrete topics and a 15 minute practice set

- Advisor follows with a call offer and books tutoring if needed

- Enroll the student in a micro review inside the LMS and check completion

Ground advisor training in NACADA’s core competencies so outreach links to planning, not just reminders.

Experiment design and evaluation

Treat alerts as an intervention you continuously test.

- Designs. Use randomized A/B tests on triggers where feasible. For campus wide policies, run a stepped wedge rollout by department.

- Primary outcomes. Course pass rates and withdrawals for flagged students, compared with matched controls.

- Behavioral outcomes. Engagement recovery within 7 days, assignment catch up within 14 days, show rates for calls.

- Guardrails. Do not let alert volume exceed advisor capacity. Watch for message fatigue and unsubscribes.

- Attribution. Where possible, use uplift modeling to target segments more likely to respond to outreach.

- Reporting cadence. Weekly operational dashboard and an end of term impact review with methodology notes.
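For a simple randomized A/B test on one trigger, pass rates in the two arms can be compared with a two proportion z test, sketched below with the standard library only. This covers the basic randomized case; stepped wedge rollouts and matched control comparisons need methods that account for time and clustering, which this sketch does not attempt.

```python
import math

def two_proportion_z(success_a, n_a, success_b, n_b):
    """Compare two pass rates.

    Returns (difference in rates, z statistic, two sided p value),
    using the pooled standard error under the null of equal rates.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pass rates are all 0 or all 1; the test is undefined")
    z = (p_a - p_b) / se
    # Two sided p value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value
```

As a usage example with invented numbers, `two_proportion_z(130, 200, 110, 200)` compares a treatment arm where 130 of 200 flagged students passed with a control arm where 110 of 200 passed. Record the sample sizes and the test used in the methodology notes of the end of term review.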

Governance, consent, and privacy

Publish a plain language student explainer that covers what you collect, why, how long you keep it, and how alerts work. Align to national rules and institutional policy.

- For the Philippines, ensure compliance with Republic Act 10173 (the Data Privacy Act of 2012) and National Privacy Commission guidance.

- Use the Jisc learning analytics code of practice as a reference for transparency, proportionality, and student rights.

- Complete a data protection impact assessment, define roles and access, set retention limits, and audit messaging content each term.

- Document human in the loop decisions. Advisors must be able to override or suppress an alert when context requires.

Rollout plan you can adopt

- Weeks 1 to 2. Pick 4 pilot courses, finalize signals, write 6 playbooks, and configure quiet hours.

- Weeks 3 to 4. Back test thresholds, estimate weekly alert volume, and confirm advisor capacity.

- Week 5. Soft launch with advisors and two departments. Start an A/B test on one trigger.

- Week 6. Add two administrative blockers like bursar and registrar holds.

- Week 7. Publish the student transparency page and FAQ.

- End of term. Impact review, fairness checks, and updated thresholds for next term.

Where PathBuilder fits

PathBuilder’s strengths align with this operating model.

- Use adaptive learning to assign targeted micro reviews when a trigger fires.

- Guide students with personalized learning paths and track completion in your advisor console.

- Frame AI supported practice and feedback with the AI in education explainer so faculty see how formative hints are created.

To scope a pilot and see how workflows map to your stack, request a structured demo on About PathBuilder.

Templates you can copy

Trigger spec template

- Name, purpose, target courses

- Logic, thresholds, suppression, cool down

- Owner, SLA, escalation path

- Message variants, link targets

- Success metric and review date

Advisor note template

- Student context and top three risk drivers

- Action taken and time spent

- Student response and next step

- Follow up date and case status

Weekly ops checklist

- Alert volume versus capacity

- Top nonactionable alerts and fixes

- Playbooks with lowest response rates

- Data quality exceptions

- Equity and fairness spot checks
